Vulnerability of Person Re-Identification Models to Metric Adversarial Attacks

Background

When we think of smart supervision of camera networks, we think of tracking objects or people across the views of different cameras. This task is called person re-identification (re-ID). To solve it, we employ deep neural networks to find people across images through metric learning. The network learns to project images of people into a feature space in which similar images (images of the same person) are close according to a given distance.

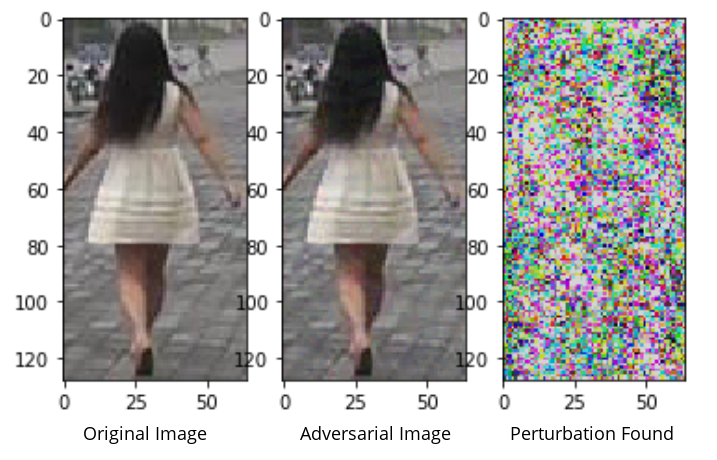

But deep neural networks are vulnerable to adversarial attacks: human-imperceptible perturbations maliciously generated to fool a neural network. These attacks are extensively studied for image classification, but they also apply to metric learning. Attacking a metric consists in altering the distances between the features of an attacked image and those of reference images (guides).

When state-of-the-art person re-ID models are confronted with adversarial examples, they fail to recognize a person correctly. This represents a security breach, which is all the more dangerous because a human operator cannot detect it.

TL;DR

We investigate different possible attacks depending on the number and type of guides available. Two particularly effective attacks stand out:

- Self Metric Attack (SMA), a strong attack that does not need any image apart from the attacked image.

- Furthest-Negative Attack (FNA), an even more effective attack that makes full use of a set of images.

To defend against these attacks, we adapt the adversarial training protocol for metric learning.

Person Re-Identification

First of all, what is Person Re-Identification?

Person Re-Identification aims to find a given person across multiple images. In practice, the objective is to rank a gallery of images from most similar to least similar to a query image.

It is a key problem for smart supervision of a camera network. It can be viewed as an open-set ranking (or retrieval) problem, which means that the classes (identities) seen at test time differ from those seen during training. So unlike in a closed-set problem, we can’t use the class information learned at training time for the evaluation.

Concretely, this means that for each query, we want to rank the gallery such that the first images have the same identity as the query.

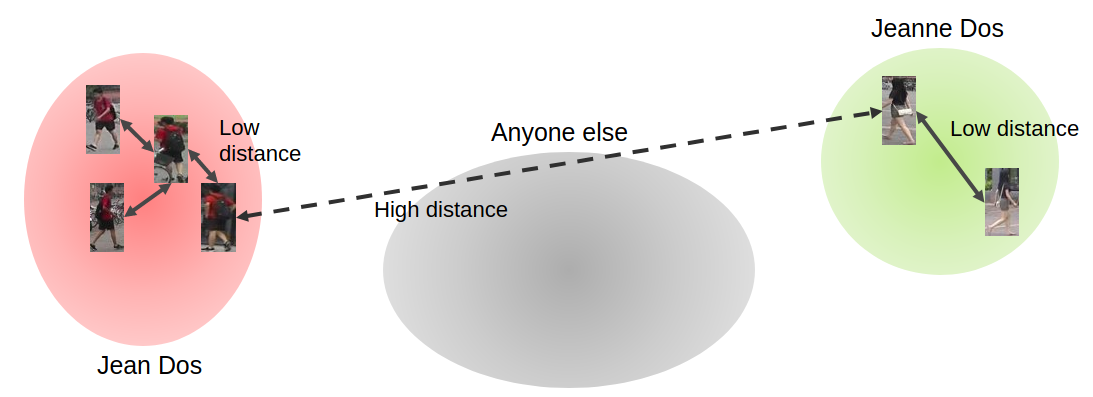

For example, let’s take Jean and Jeanne Dos, the French cousins of the Doe family. For Jean, we rank the gallery such that Jean appears in the first images. Then we do the same thing for Jeanne. Note that the ranking is different, since it is based on the similarity with Jeanne and not with Jean.
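The ranking step can be sketched in a few lines. This is only a toy illustration: the 2-D feature values, the `rank_gallery` helper and the choice of the L2 distance are assumptions made for the example, not the features of a real re-ID model.

```python
import numpy as np

def rank_gallery(query_feat, gallery_feats):
    """Return gallery indices sorted from most to least similar to the query."""
    # L2 distance between the query feature and every gallery feature.
    dists = np.linalg.norm(gallery_feats - query_feat, axis=1)
    # A stable sort keeps the original gallery order when distances tie.
    return np.argsort(dists, kind="stable")

# Hypothetical 2-D features: two images of Jean, two images of Jeanne.
gallery = np.array([[0.0, 0.1], [0.1, 0.0], [1.0, 1.0], [0.9, 1.1]])
gallery_ids = np.array(["Jean", "Jean", "Jeanne", "Jeanne"])

jean_query = np.array([0.05, 0.05])
jeanne_query = np.array([1.0, 1.05])

# Each query produces its own ranking of the same gallery.
jean_ranking = gallery_ids[rank_gallery(jean_query, gallery)]
jeanne_ranking = gallery_ids[rank_gallery(jeanne_query, gallery)]
print(jean_ranking)
print(jeanne_ranking)
```

With these toy values, the images of Jean come first for the query of Jean, and the images of Jeanne come first for the query of Jeanne.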

So how can we solve this problem in practice? For that, we use Metric Learning.

Metric Learning

The idea of Metric Learning is: given a distance, learn an embedding space in which similar images have a low distance and dissimilar images have a high distance.

In our case, images of the same person (images with the same identity) have a low distance, and images of different persons have a high distance. Usually, we use an L2 distance or a cosine similarity as our metric.
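As a small illustration, the two metrics can be written as follows. The helper names are ours. Note that a cosine similarity is high for similar features, so it is commonly turned into a distance as `1 - similarity`.

```python
import numpy as np

def l2_distance(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(np.asarray(a) - np.asarray(b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero feature vectors."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def cosine_distance(a, b):
    """Low for features pointing the same way, high for opposite ones."""
    return 1.0 - cosine_similarity(a, b)

# Hypothetical features for illustration.
same_person = ([1.0, 0.0], [0.9, 0.1])
different_persons = ([1.0, 0.0], [0.0, 1.0])
print(l2_distance(*same_person), l2_distance(*different_persons))
print(cosine_distance(*same_person), cosine_distance(*different_persons))
```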

State-of-the-art Metric Attacks

So now we want to know: how can we attack a metric learning system? Can we use the same adversarial attacks as for classification problems?

Classification attacks

For classification problems, attacks use the class information learned during training. The models are attacked at the logit level, and the objective is to move the predicted class away from the correct class.

We can do this in a targeted way, where the images are classified as a specific target class, or in a non-targeted way, where the images can be assigned to any other class.

However, in our case, the class information is not available.

Guides for Metric attacks

Classification relies on a label function that takes a single input. Metric learning, in contrast, uses a distance function that takes two inputs and computes the distance between two points. So to attack the metric, we need another point that we will use as a guide for the attack.

A guide can induce two kinds of perturbation.

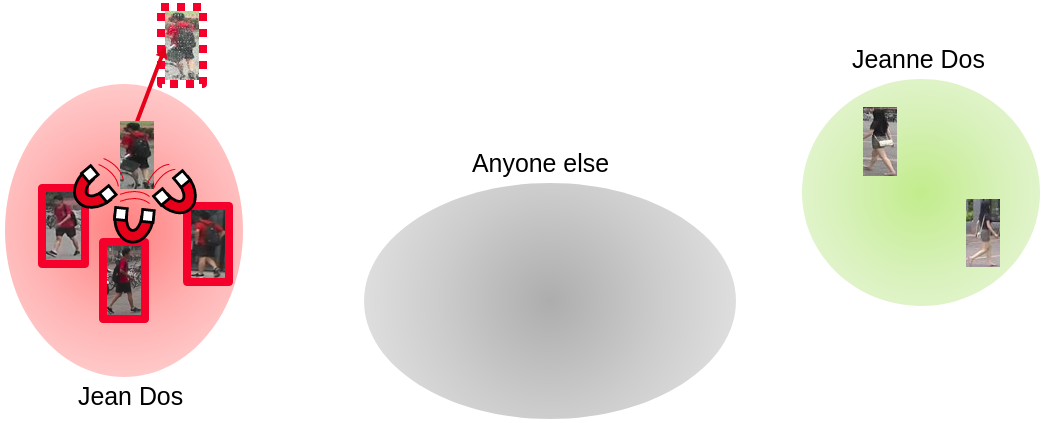

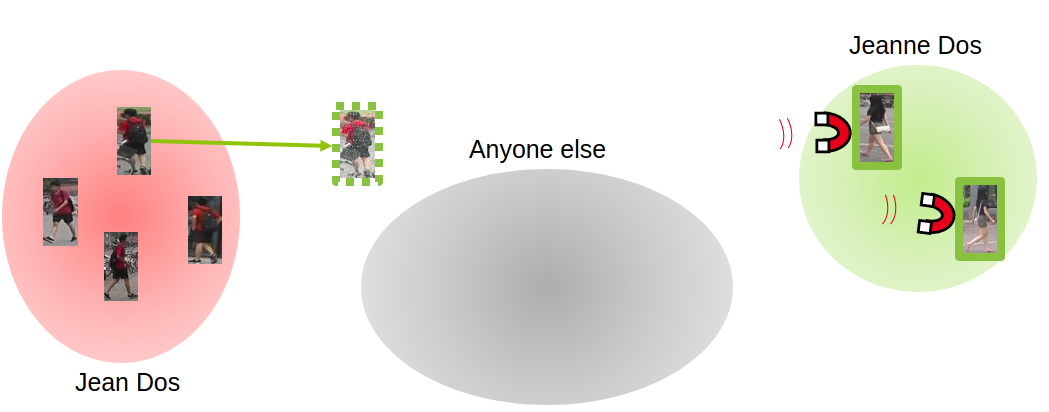

Pulling guides

The first kind is the pulling effect. The guide can decrease the distance and move the attacked image close to the identity of another person. We will call this a pulling guide.

For instance, if we want to attack an image of Jean, this can be done by using an image of Jeanne. A pulling guide attack can be viewed as a targeted metric attack.

The resulting adversarial image will have a low distance to other images of Jeanne, but it can stay relatively close to the cluster of Jean. Pulling therefore does not imply that images of Jean will be relegated to the last rows of the ranking.

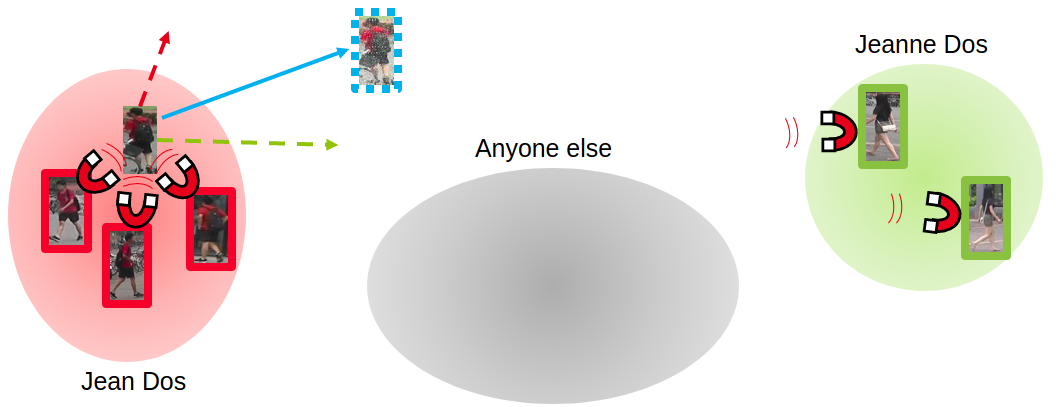

Pushing guides

The second kind of perturbation is the pushing effect. The guide can increase the distance and move the attacked image away from similar images. We will call this a pushing guide.

For instance, if we want to attack an image of Jean, this can be done by using another image of Jean. A pushing guide attack can be viewed as a non-targeted metric attack.

By increasing the distance between the adversarial image and the guide, the adversarial image moves away from all other similar images and ends up far from the initial cluster. But the image can be pushed in a direction where there are no other images. In that case, a greater distance is needed to change the ranking than in a direction where there is another cluster.

The Metric or Single Guide (SG.) FGSM/IFGSM/MIFGSM attacks [1] are examples of pushing or pulling guide attacks.
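To make the two effects concrete, here is a minimal sketch of one sign-gradient step against a single guide, in the spirit of FGSM. It assumes a toy linear embedding `W @ x` in place of a real re-ID network, so that the gradient can be written by hand. The names `embed`, `sq_dist_grad` and `single_guide_step` are ours and are not taken from the cited attacks.

```python
import numpy as np

def embed(W, x):
    # Toy linear embedding standing in for a re-ID network (assumption).
    return W @ x

def sq_dist_grad(W, x, guide_feat):
    # Gradient of ||W x - g||^2 with respect to x, i.e. 2 W^T (W x - g).
    return 2.0 * W.T @ (embed(W, x) - guide_feat)

def single_guide_step(W, x, guide_feat, eps, mode):
    """One sign-gradient step of size eps.

    mode="push" increases the distance to the guide,
    mode="pull" decreases it.
    """
    if mode not in ("push", "pull"):
        raise ValueError("mode must be 'push' or 'pull'")
    direction = 1.0 if mode == "push" else -1.0
    x_adv = x + direction * eps * np.sign(sq_dist_grad(W, x, guide_feat))
    return np.clip(x_adv, 0.0, 1.0)  # keep pixel values in [0, 1]

# Hypothetical 3-pixel "image" and 2-D embedding.
W = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
x = np.array([0.5, 0.5, 0.5])
guide_feat = embed(W, np.array([0.2, 0.8, 0.5]))

d_clean = np.linalg.norm(embed(W, x) - guide_feat)
d_push = np.linalg.norm(embed(W, single_guide_step(W, x, guide_feat, 0.05, "push")) - guide_feat)
d_pull = np.linalg.norm(embed(W, single_guide_step(W, x, guide_feat, 0.05, "pull")) - guide_feat)
print(d_pull, d_clean, d_push)
```

In this toy setting, the pulling step lowers the distance to the guide and the pushing step raises it. An iterative variant would repeat the step with a smaller step size while keeping the total perturbation within the budget.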

Artificial guides

These previous metric attacks require access to other images, which is not always possible for an attacker. How can we attack if we don’t have a guide? For example, what if we have a single image of Jean that we want to attack?

We can construct an artificial guide to emulate another image. This artificial guide can be either pushing or pulling. We call this kind of attack self-sufficient, since it does not require additional images. ODFA [2] is an example of a self-sufficient attack.

Our attacks

Now I’m going to present our contributions and metric attacks.

Self Metric Attack

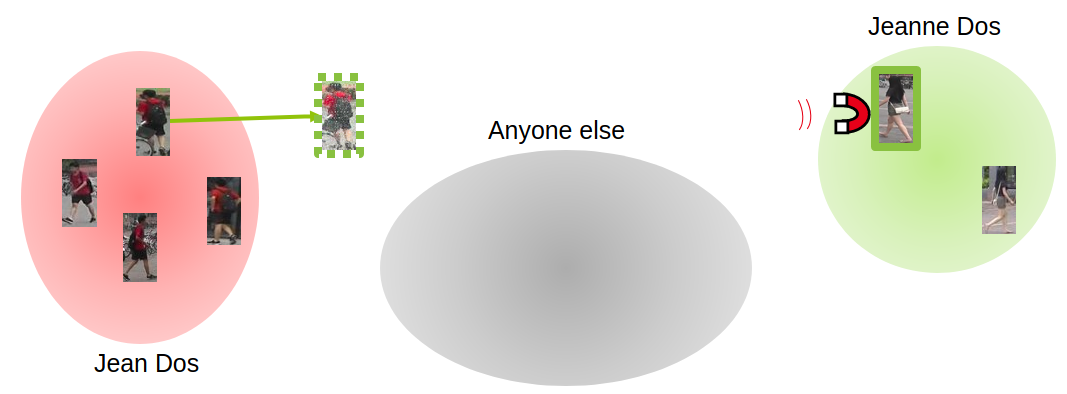

In the setting where the attacker does not have access to additional images, we propose the Self Metric Attack (SMA), which uses the image under attack as an artificial pushing guide.

The attack first creates a copy of the original image and moves it slightly in a random direction. It then moves this copy away from the original image.
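These two steps can be sketched as follows. This is a simplified illustration, not our exact implementation: it assumes a toy linear embedding `W @ x`, an L-infinity budget `eps`, and a fixed number of sign-gradient steps, all of which are choices made for the example. One reason the random offset is useful: the squared distance between the copy and the original has a zero gradient when the two are identical, so there would be no direction to follow.

```python
import numpy as np

def self_metric_attack(W, x, eps, step, n_steps, rng):
    """Sketch of a self-guided pushing attack on a toy linear embedding W."""
    anchor = W @ x  # features of the clean image, used as the pushing guide
    # Random start inside the budget, so the distance gradient is non-zero.
    x_adv = x + rng.uniform(-eps, eps, size=x.shape)
    for _ in range(n_steps):
        # Gradient of ||W x_adv - anchor||^2 with respect to x_adv.
        grad = 2.0 * W.T @ (W @ x_adv - anchor)
        x_adv = x_adv + step * np.sign(grad)      # move away from the original
        x_adv = np.clip(x_adv, x - eps, x + eps)  # stay within the budget
        x_adv = np.clip(x_adv, 0.0, 1.0)          # stay a valid image
    return x_adv

# Hypothetical 3-pixel image and identity embedding.
rng = np.random.default_rng(0)
W = np.eye(3)
x = np.array([0.5, 0.5, 0.5])
x_adv = self_metric_attack(W, x, eps=0.05, step=0.01, n_steps=10, rng=rng)
print(np.abs(x_adv - x))
```

No image other than `x` is needed: the clean features play the role of the guide.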

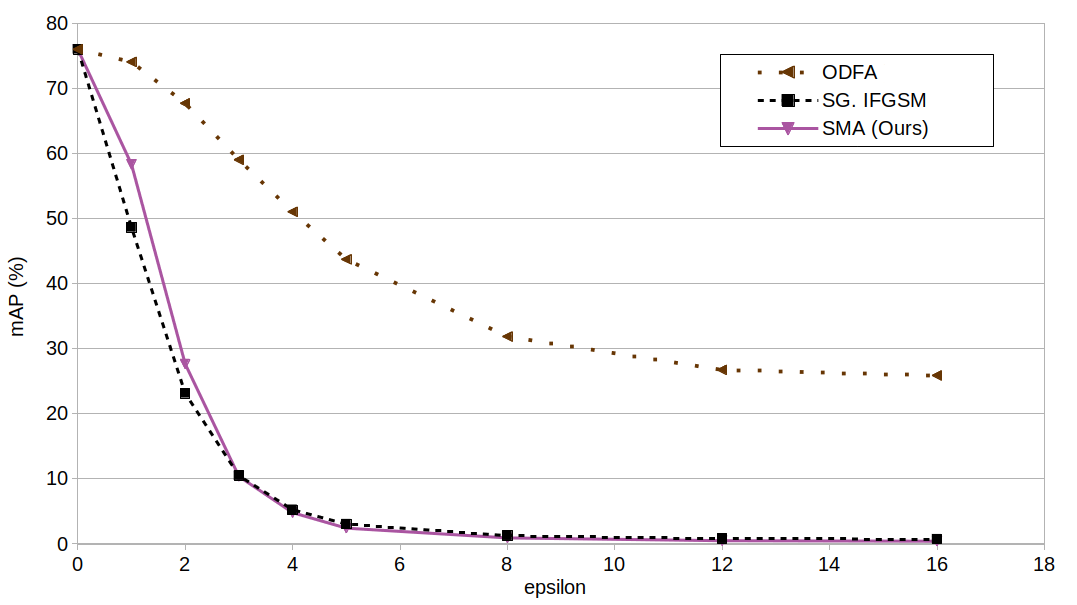

Our proposed attack is competitive with the state-of-the-art metric attack that uses a pushing guide, and it outperforms the other self-sufficient attack by a large margin.

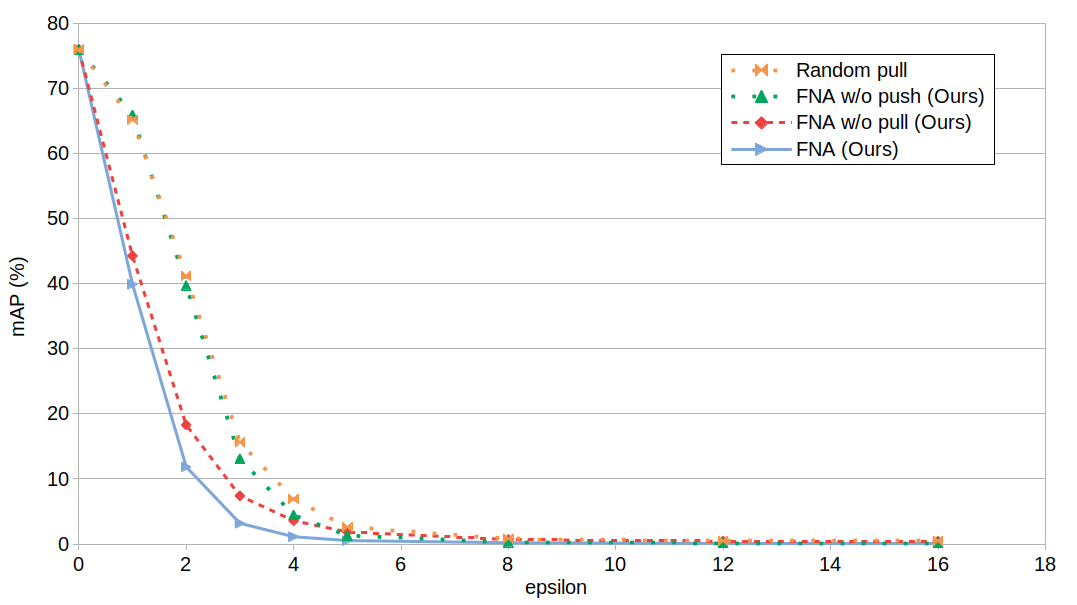

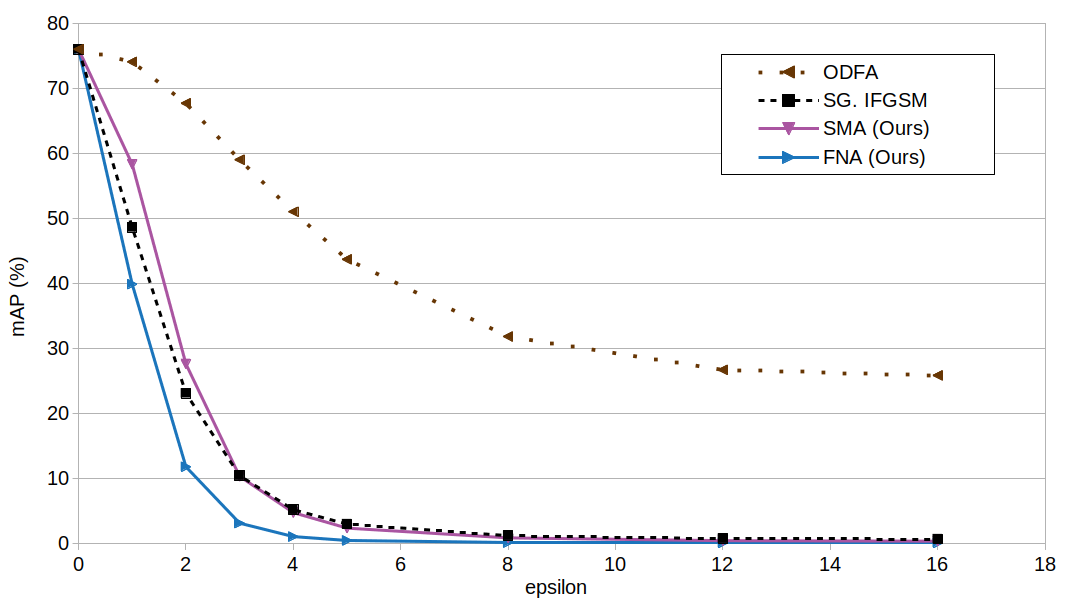

In the performance curves, a lower mean average precision (or mAP) is better for the attack.
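For readers unfamiliar with the metric, here is a minimal sketch of how average precision is computed for one ranked gallery, and how mAP averages it over queries. The helper names and the toy rankings are ours. Re-ID benchmarks usually apply extra filtering rules to the gallery, which this sketch leaves out.

```python
import numpy as np

def average_precision(ranked_matches):
    """AP for one query.

    ranked_matches[i] is True when the gallery image at rank i
    has the same identity as the query.
    """
    matches = np.asarray(ranked_matches, dtype=bool)
    if not matches.any():
        return 0.0
    hits = np.cumsum(matches)                 # number of correct images so far
    ranks = np.arange(1, len(matches) + 1)
    # Precision measured at each rank where a correct image appears.
    return float((hits[matches] / ranks[matches]).mean())

def mean_average_precision(all_ranked_matches):
    """Mean of the per-query average precisions."""
    return float(np.mean([average_precision(m) for m in all_ranked_matches]))

clean_ranking = [True, True, False, False]     # correct images ranked first
attacked_ranking = [False, False, True, True]  # correct images ranked last
print(average_precision(clean_ranking))     # 1.0
print(average_precision(attacked_ranking))  # 5/12, about 0.417
```

An attack that pushes the correct images down the ranking lowers the AP of the query, and therefore the mAP.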

Furthest-Negative Attack

At the other extreme, the attacker can have access to multiple images.

To better approximate the best direction in which to move the image, we propose to use multiple guides instead of a single one.

If these images have the same identity as the attacked image, they will be pushing guides. This means using the other images of Jean. With multiple pushing guides, the attacked image is moved out of its cluster more efficiently.

If the images all come from a single identity that differs from that of the attacked image, for instance images of Jeanne, they will be pulling guides.

With multiple pulling guides, the attacked image will move more efficiently toward the center of the targeted identity cluster. This is important to obtain a low distance to all the images of this identity, so that the adversarial image blends more easily with this identity in the ranking.

So in the setting where access to additional images is not a constraint, we propose to use them all by combining pushing and pulling guides.

However, for an effective combination of both, we select only the images from the furthest identity cluster to be used as pulling guides. This leads to our Furthest-Negative Attack (FNA).

Indeed, as we want the biggest change in the ranking, the attacked image has to move closer to another identity cluster while having the highest distance with its initial identity cluster. This is important to have the most impact on the ranking. Images from the pulling cluster will appear first in the ranking and similar images will appear last. This gives the most efficient direction for the perturbation.
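The selection and the combined perturbation can be sketched as follows. Several choices here are assumptions made for illustration: the embedding is a toy linear map `W @ x`, "furthest" is measured from the attacked features to each cluster center, and the objective is the mean squared distance to the pushing guides minus the mean squared distance to the pulling guides. The function names and toy features are hypothetical.

```python
import numpy as np

def furthest_identity(feat, gallery_feats, gallery_ids, own_id):
    """Identity (other than own_id) whose cluster center is furthest from feat."""
    candidates = [i for i in np.unique(gallery_ids) if i != own_id]
    dists = [np.linalg.norm(feat - gallery_feats[gallery_ids == i].mean(axis=0))
             for i in candidates]
    return candidates[int(np.argmax(dists))]

def fna_step(W, x, push_feats, pull_feats, eps):
    """One sign-gradient step: away from pushing guides, toward pulling guides."""
    f = W @ x
    # Gradient, with respect to the features, of
    # mean ||f - push||^2 - mean ||f - pull||^2 (assumed objective).
    grad_f = 2.0 * (f - push_feats).mean(axis=0) - 2.0 * (f - pull_feats).mean(axis=0)
    grad_x = W.T @ grad_f  # chain rule through the linear embedding
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

# Hypothetical 2-D gallery with three identities.
W = np.eye(2)
gallery = np.array([[0.0, 0.0], [0.1, 0.0], [0.5, 0.5], [1.0, 1.0], [0.9, 1.0]])
ids = np.array(["Jean", "Jean", "Jeanne", "Jacques", "Jacques"])

x = np.array([0.05, 0.05])  # attacked image of Jean
target = furthest_identity(W @ x, gallery, ids, "Jean")
x_adv = fna_step(W, x, gallery[ids == "Jean"], gallery[ids == target], eps=0.05)
print(target, x_adv)
```

In this toy gallery, the furthest cluster is the one of Jacques, so the step moves the image away from the images of Jean and toward those of Jacques at the same time.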

As you can see, pulling from the furthest cluster is consistently more effective than choosing a random cluster.

We can also see that the pushing effect is more important for small perturbations, because the image has to move away from similar images. This does not always happen with a small pulling perturbation: the image can be pulled closer to some images from the original identity cluster.

Then, as the perturbation size increases, the pulling effect becomes more important than the pushing effect because, as explained earlier, the adversarial image has to move in a direction where another identity cluster already exists in order to reduce the distance needed to perturb the ranking.

Finally, a combination of both is always more effective.

When comparing our attacks with the state-of-the-art, we can see that the Self Metric Attack has competitive performance with metric attacks that depend on additional images. It is the strongest self-sufficient attack.

But overall, the Furthest-Negative Attack is the most effective metric attack.

In practice, choosing the best attack depends on the access to additional images. Without additional images, the Self Metric Attack is the best choice. But as long as we have access to at least one other image, the Furthest-Negative Attack becomes the best choice.

The Furthest-Negative Attack makes full use of all the images available.

Defending Re-Identification Models

After looking at attacking metric learning models, let’s look at defending them.

As explained earlier, metric attacks require another point to compute and attack the distance. We would like to use the equivalent of non-targeted attacks for an effective adversarial training against metric attacks.

We can use a self-sufficient attack, like the Self Metric Attack, since such attacks create artificial guides. But stronger attacks, like the Furthest-Negative Attack, will require additional images.

Guide-Sampling Online Adversarial Training (GOAT)

So to address this problem, we propose GOAT (Guide-Sampling Online Adversarial Training), a sampling strategy that makes it possible to use metric attacks in adversarial training.

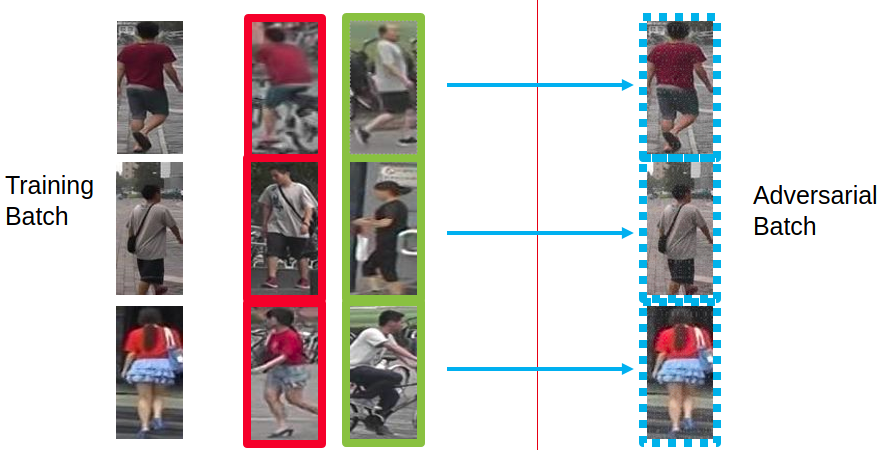

During training, for each image in a batch, we sample additional pushing and pulling guides from the training set. Then using the guides sampled for each image, we generate an adversarial batch for training.

The metric attack used for training depends on the number of pushing and pulling guides sampled. If no guides are sampled, we use the Self Metric Attack.
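The sampling step can be sketched as follows. The details are assumptions made for illustration: pushing guides are drawn from the other training images of the same identity, pulling guides are drawn from one randomly chosen other identity, and every identity is assumed to have at least two training images. The helper names and the `"guided"` label are hypothetical.

```python
import numpy as np

def sample_guides(index, train_ids, n_push, n_pull, rng):
    """Sample guide indices for the training image at position `index`."""
    own_id = train_ids[index]
    same = np.flatnonzero(train_ids == own_id)
    same = same[same != index]  # the image is not its own guide
    # One random other identity provides all pulling guides (assumption).
    pull_id = rng.choice(np.unique(train_ids[train_ids != own_id]))
    other = np.flatnonzero(train_ids == pull_id)
    # Sample with replacement only when there are too few candidates.
    push = rng.choice(same, size=n_push, replace=len(same) < n_push)
    pull = rng.choice(other, size=n_pull, replace=len(other) < n_pull)
    return push, pull

def choose_attack(n_push, n_pull):
    # With no guides at all, fall back to the self-sufficient attack.
    return "SMA" if n_push == 0 and n_pull == 0 else "guided"

# Hypothetical training labels: identity 0 has five images.
train_ids = np.array([0, 0, 0, 0, 0, 1, 1, 2, 2])
rng = np.random.default_rng(0)
push, pull = sample_guides(0, train_ids, n_push=4, n_pull=1, rng=rng)
print(push, pull, choose_attack(4, 1), choose_attack(0, 0))
```

The sampled guides are then passed to the metric attack to build the adversarial version of each image in the batch.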

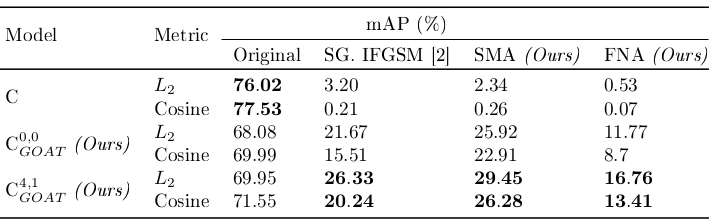

This table compares an undefended model and models defended with GOAT with no guides sampled and with 4 pushing guides and 1 pulling guide. The number of pushing or pulling guides is written in superscript.

So we can see that, compared with undefended models, models defended with GOAT offer better robustness while keeping competitive performance. In the table, a higher mAP is better for robustness.

Conclusion

As Person Re-Identification is mainly used for video surveillance, security and robustness against adversarial attacks are really important.

To attack metric learning models, we need another point (or image) that will be used as a guide. This guide can be a pushing guide, which increases the distance to the initial identity, or a pulling guide, which decreases the distance to another identity cluster. If we don’t have access to additional images, we can create an artificial guide with a self-sufficient attack.

Depending on the images available, we proposed two metric attacks:

- The Self Metric Attack (SMA), the strongest self-sufficient attack, which does not depend on additional images. It has competitive performance with attacks that require images.

- The Furthest-Negative Attack (FNA), that combines pushing and pulling from the furthest cluster. It makes full use of all images available with multiple guides.

Finally, we proposed GOAT, an extension of adversarial training to train robust metric learning models against metric attacks.